About me

I’m a third-year Ph.D. student in the MURGe-Lab at the University of North Carolina at Chapel Hill, advised by Prof. Mohit Bansal. Prior to joining UNC, I received my master’s degrees in Computer Science and Financial Engineering from Columbia University, where I was a member of the DVMM Lab advised by Prof. Shih-Fu Chang and a member of the ROAM Lab advised by Prof. Matei Ciocarlie and Prof. Shuran Song. I also work closely with Prof. Krzysztof Choromanski. In addition, I’ve been working as a research scientist intern with the JEPA team at Meta FAIR Lab and the Media Generation Team at Meta Superintelligence Labs.

My recent research focuses on generative models, multimodal learning, and LLMs. I’m also broadly interested in theory-grounded algorithms for efficient machine learning.

You can find my CV here.

Feel free to reach out by email or WeChat if you would like to chat about any research ideas! :)

Publications

2025

(Preprint 2025) Exploring MLLM-Diffusion Information Transfer with MetaCanvas

Han Lin, Xichen Pan, Ziqi Huang, Ji Hou, Jialiang Wang, Weifeng Chen, Zecheng He, Felix Juefei-Xu, Junzhe Sun, Zhipeng Fan, Ali Thabet, Mohit Bansal, Chu Wang

[Paper][Project Page]

(NeurIPS 2025) Bifrost-1: Bridging Multimodal LLMs and Diffusion Models with Patch-level CLIP Latents

Han Lin, Jaemin Cho, Amir Zadeh, Chuan Li, Mohit Bansal

[Paper]

(ICLR 2025) VEDiT: Latent Prediction Architecture for Procedural Video Representation Learning

Han Lin, Tushar Nagarajan, Nicolas Ballas, Mido Assran, Mojtaba Komeili, Mohit Bansal, Koustuv Sinha

[Paper]

(ICLR 2025 Oral) CTRL-Adapter: An Efficient and Versatile Framework for Adapting Diverse Controls to Any Diffusion Model

Han Lin*, Jaemin Cho*, Abhay Zala, Mohit Bansal

[Paper][Project Page]

2024

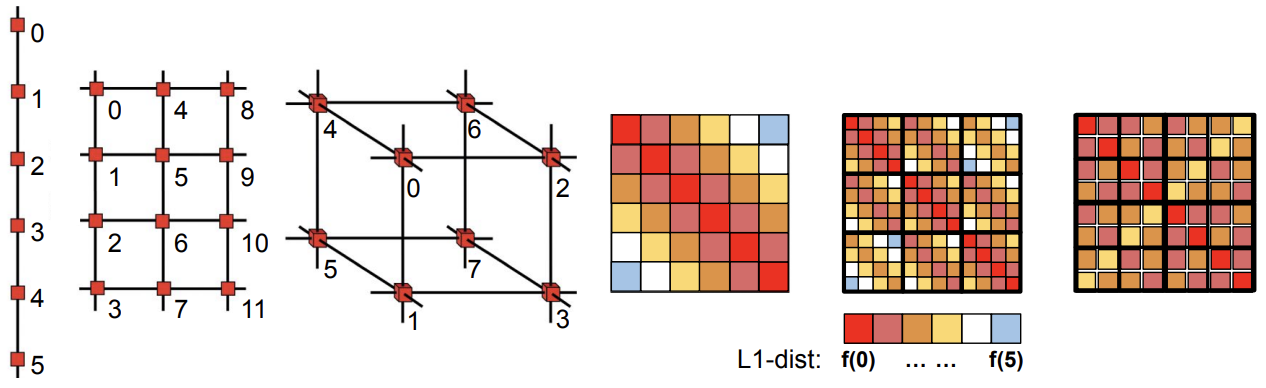

(NeurIPS 2024) Fast Tree-Field Integrators: From Low Displacement Rank to Topological Transformers

Krzysztof Choromanski, Arijit Sehanobish, Somnath Basu Roy Chowdhury, Han Lin, Avinava Dubey, Tamas Sarlos, Snigdha Chaturvedi

[Paper]

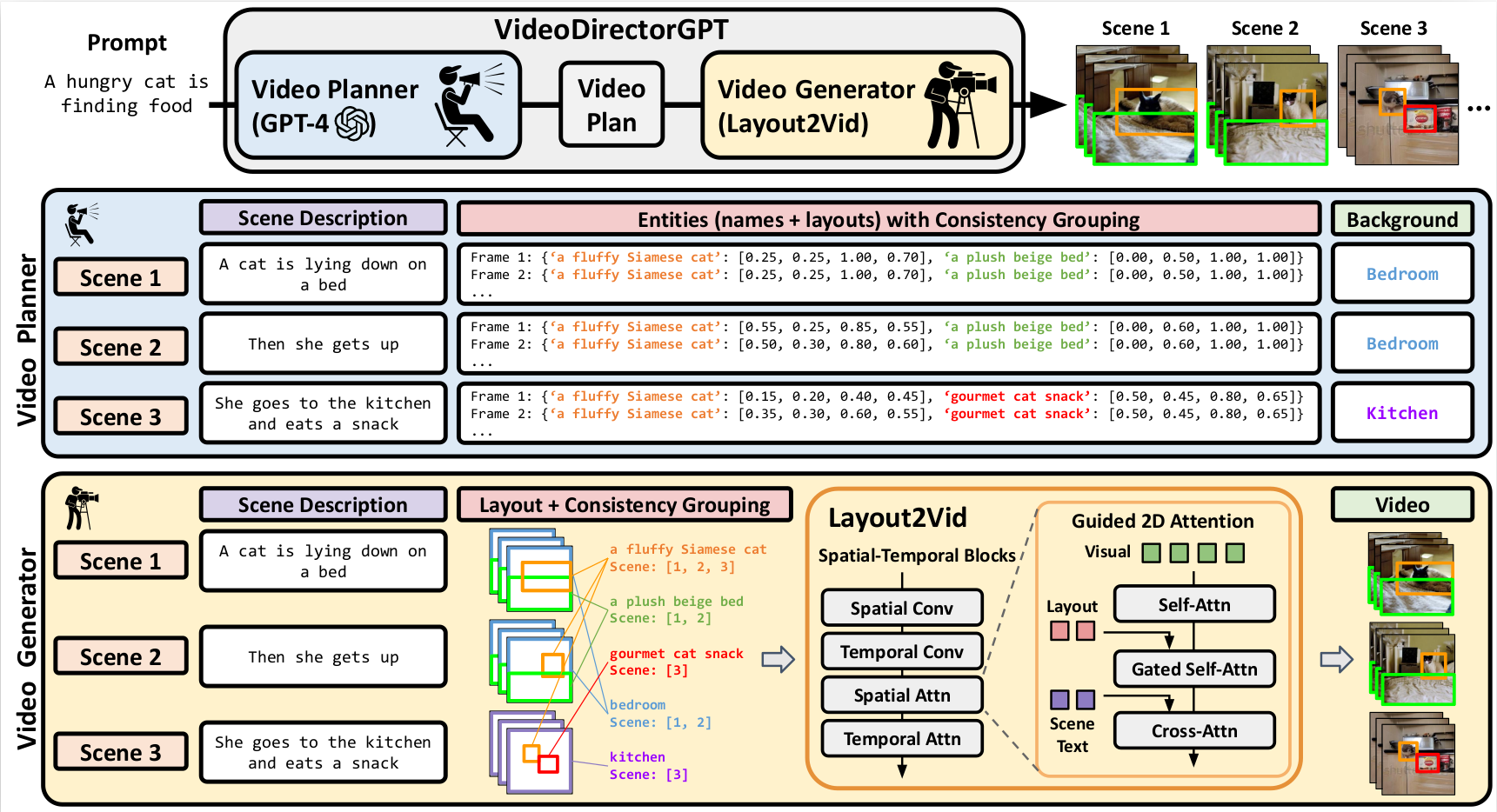

(COLM 2024) VideoDirectorGPT: Consistent Multi-Scene Video Generation via LLM-Guided Planning

Han Lin, Abhay Zala, Jaemin Cho, Mohit Bansal

[Paper][Project Page]

(COLM 2024) EnvGen: Generating and Adapting Environments via LLMs for Training Embodied Agents

Abhay Zala*, Jaemin Cho*, Han Lin, Jaehong Yoon, Mohit Bansal

[Paper][Project Page]

(COLM 2024) DiagrammerGPT: Generating Open-Domain, Open-Platform Diagrams via LLM Planning

Abhay Zala, Han Lin, Jaemin Cho, Mohit Bansal

[Paper][Project Page]

2023

(ICML 2023) Efficient Graph Field Integrators Meet Point Clouds

Krzysztof Choromanski*, Arijit Sehanobish*, Han Lin*, Yunfan Zhao*, Eli Berger, Alvin Pan, Tetiana Parshakova, Tianyi Zhang, David Watkins, Valerii Likhosherstov, Somnath Basu Roy Chowdhury, Avinava Dubey, Deepali Jain, Tamas Sarlos, Snigdha Chaturvedi, Adrian Weller

[Paper]

(CVPR 2023) Supervised Masked Knowledge Distillation for Few-shot Transformers

Han Lin*, Guangxing Han*, Jiawei Ma, Shiyuan Huang, Xudong Lin, Shih-Fu Chang

[Paper][Code][Slides]

(ICRA 2023) Active Tactile Exploration for 3D Object Recognition

Jingxi Xu*, Han Lin*, Shuran Song, Matei Ciocarlie

[Paper][Project Page][Video]

2022

(ICML 2022) From block-Toeplitz matrices to differential equations on graphs: towards a general theory for scalable masked Transformers

Krzysztof Choromanski*, Han Lin*, Haoxian Chen*, Tianyi Zhang, Arijit Sehanobish, Valerii Likhosherstov, Jack Parker-Holder, Tamas Sarlos, Adrian Weller, Thomas Weingarten

[Paper][Code][Poster]

(ICLR 2022) Hybrid Random Features

Krzysztof Choromanski*, Han Lin*, Haoxian Chen*, Yuanzhe Ma*, Arijit Sehanobish*, Deepali Jain, Michael S Ryoo, Jake Varley, Andy Zeng, Valerii Likhosherstov, Dmitry Kalashnikov, Vikas Sindhwani, Adrian Weller

[Paper][Code][Video][Slides]

2021

(Preprint 2021) Graph Kernel Attention Transformers

Krzysztof Choromanski*, Han Lin*, Haoxian Chen*, Jack Parker-Holder

[Paper][Code]

2020

(NeurIPS 2020) Demystifying Orthogonal Monte Carlo and Beyond

Han Lin*, Haoxian Chen*, Tianyi Zhang, Clement Laroche, Krzysztof Choromanski

[Paper][Code][Video]

* Equal contribution.

Teaching Assistantships

- COMS 4231 Analysis of Algorithms, Fall 2022, Columbia University

- COMS 4732 Computer Vision 2: Learning, Spring 2022, Columbia University

- COMS 4721 Machine Learning for Data Science, Spring 2022, Columbia University

- QMSS 5073 Machine Learning for Social Science, Fall 2021, Columbia University

- IEOR 4007 Optimization Models & Methods for FE, Fall 2019, Columbia University

- IEOR 4418 Transportation Analytics & Logistics, Spring 2019, Columbia University

Academic Service

- Conference Reviewer: NeurIPS 2022-2024, ICML 2022-2024, ICLR 2024-2025, CVPR 2025

- Conference Volunteer: RSS 2022